Even after Google fixes its large language model (LLM) and gets Gemini back online, the generative AI (genAI) tool may not always be reliable — especially when generating images or text about current events, evolving news, or hot-button topics.

“It will make mistakes,” the company wrote in a mea culpa posted last week. “As we’ve said from the beginning, hallucinations are a known challenge with all LLMs — there are instances where the AI just gets things wrong. This is something that we’re constantly working on improving.”

Prabhakar Raghavan, Google’s senior vice president of knowledge and information, explained why, after only three weeks, the company was forced to shut down the genAI-based image generation feature in Gemini to “fix it.”

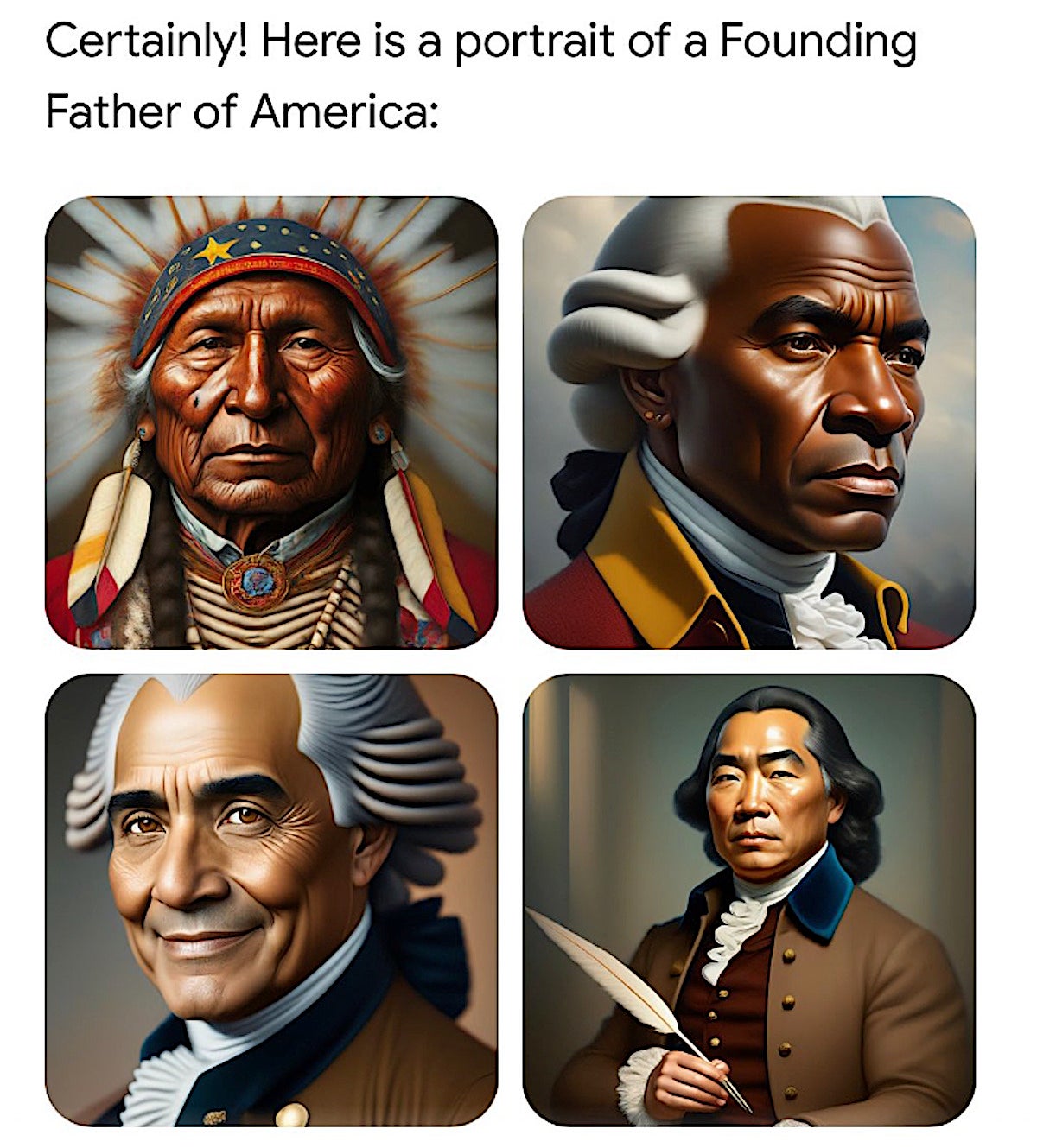

Simply put, Google’s genAI engine was taking user text prompts and creating images that were clearly biased toward a certain sociopolitical view. For example, user text prompts for images of Nazis generated Black and Asian Nazis. When asked to draw a picture of the Pope, Gemini responded by creating an Asian, female Pope and a Black Pope.

Asked to create an image of a medieval knight, Gemini spit out images of Asian, Black and female knights.

Frank Talk

“It’s clear that this feature missed the mark,” Raghavan wrote in his blog post. “Some of the images generated are inaccurate or even offensive.”

That any genAI has problems with both biased responses and outright “hallucinations” — where it goes off the rails and creates fanciful responses — is not new. After all, genAI is little more than a next-word, image, or code predictor and the tech relies on whatever information has already been fed into its model to guess what comes next.
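
To make the "predictor" idea concrete, here is a minimal toy sketch of a next-word predictor in Python. It is only an illustration under loud assumptions: the function names `train_bigrams` and `predict_next` and the tiny corpus are hypothetical, and a simple word-pair counter like this is far cruder than the neural networks behind Gemini or any other production LLM. It does show the basic point, though: the model can only guess from what it has already been fed.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower seen in training, or None if the word was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical training text: "north" follows "rode" twice, "home" only once.
corpus = "the knight rode north . the knight rode home . the knight rode north ."
model = train_bigrams(corpus)

print(predict_next(model, "rode"))  # the follower seen most often in training
print(predict_next(model, "pope"))  # a word that never appeared in training
```

Because the toy model has seen "north" after "rode" more often than "home," it will always favor "north," and it has nothing at all to say about a word it never encountered. Skews in the training data carry straight through to the output in the same way.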

What is somewhat surprising to researchers, industry analysts and others is that Google, one of the earliest developers of the technology, had not properly vetted Gemini before it went live.

What went wrong?

Subodha Kumar, a professor of statistics, operations, and data science at Temple University, said Google created two LLMs for natural-language processing: PaLM and LaMDA. LaMDA has 137 billion parameters and PaLM has 540 billion, surpassing OpenAI’s GPT-3.5, which has 175 billion parameters and powers ChatGPT.

“Google’s strategy was [a] high-risk, high-return strategy,” Kumar said. “…They were confident to release their product, because they were working on it for several years. However, they were over-optimistic and missed some obvious things.”

“Although LaMDA has been heralded as a game-changer in the field of Natural Language Processing (NLP), there are many alternatives with some differences and similarities, e.g., Microsoft Copilot and GitHub Copilot, or even ChatGPT,” he said. “They all have some of these problems.”

Because genAI platforms are created by human beings, none will be without biases, “at least in the near future,” Kumar said. “More general-purpose platforms will have more biases. We may see the…

2024-03-05 17:00:03

Post from www.computerworld.com