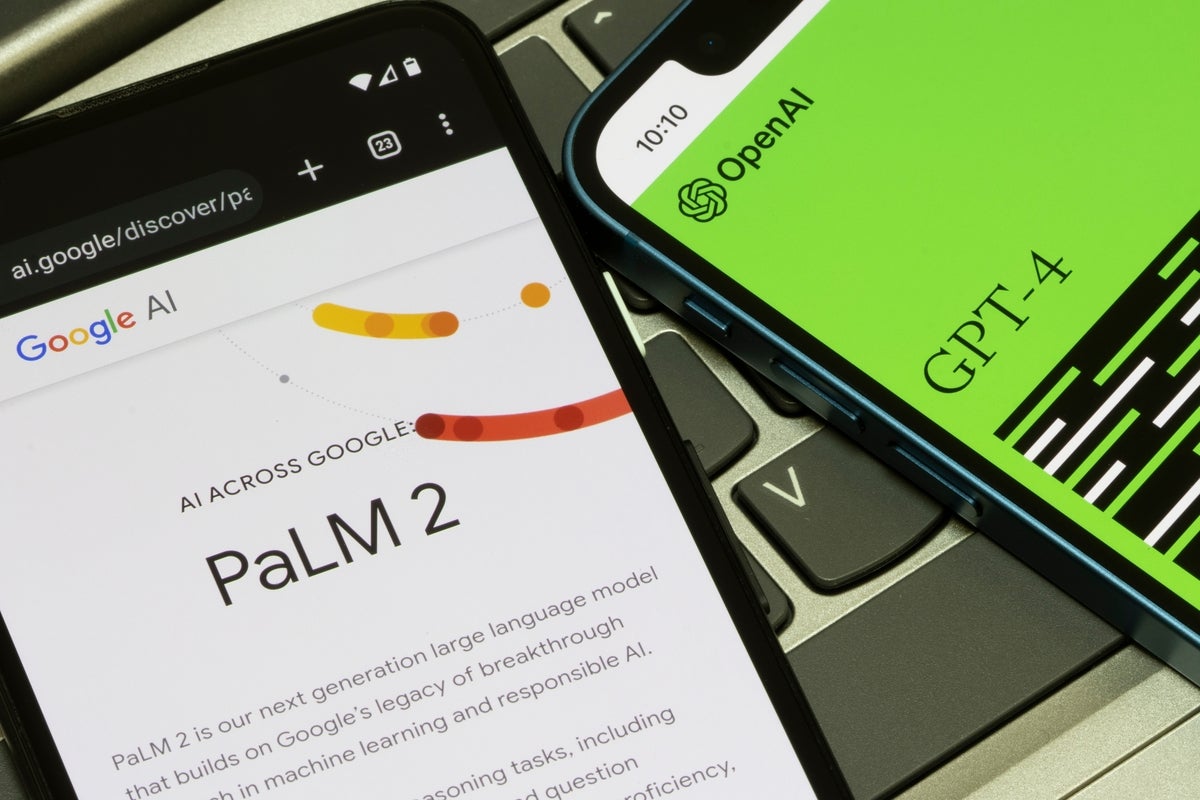

When ChatGPT was introduced in November 2022, it changed how people think about using generative artificial intelligence (AI) to automate tasks, generate creative ideas, and write software. Whether the job is summarizing emails, polishing resumes, or brainstorming marketing campaigns, AI-powered chatbots like OpenAI’s ChatGPT and Google’s Bard can help.

ChatGPT, whose name combines “chat” with GPT (generative pre-trained transformer), is built on OpenAI’s GPT large language model (LLM), which processes natural language inputs and predicts the next word based on the context it has seen so far. In essence, LLMs are next-word prediction engines. Beyond GPT, widely cited LLMs include Google’s LaMDA, Hugging Face’s BLOOM, and Nvidia’s NeMo.
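To make "next-word prediction" concrete, here is a minimal sketch in Python. It is a toy word-pair (bigram) counter, not how GPT or any production LLM works: real models use neural networks over subword tokens and far longer contexts. The function names `train_bigram` and `predict_next` and the sample corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, which words follow it in the text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus; a real LLM sees billions of words.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram(corpus)

print(predict_next(model, "the"))    # "cat" follows "the" most often
print(predict_next(model, "zebra"))  # never seen, so None
```

The principle is the same at any scale: the model picks a likely continuation given what came before, and better predictions come from more data and a richer notion of context.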

These LLMs are trained on vast amounts of data, including articles, books, and internet resources, to produce human-like responses to queries. Two trends are now emerging: customizing LLMs for specific uses, and building more capable general models such as Google’s PaLM 2, which reportedly uses significantly more training data to handle advanced tasks.

Training LLMs requires substantial compute power. During training, text is fed into the model, which repeatedly tries to predict the next word and is adjusted whenever its prediction is wrong. The training data can be proprietary corporate information or publicly available content scraped from the internet.
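As a rough illustration of what "inputting data to predict the next word" looks like, the sketch below turns a sentence into (context, next word) training pairs. It is a simplification under stated assumptions: it splits on whitespace, whereas real LLM pipelines use subword tokenizers, and the function name `next_word_examples` and the `context_size` parameter are hypothetical.

```python
def next_word_examples(text, context_size=3):
    """Build (context, target) pairs: each word is predicted from the words before it."""
    words = text.split()
    examples = []
    for i in range(1, len(words)):
        # Keep at most `context_size` preceding words as the context.
        context = words[max(0, i - context_size):i]
        examples.append((context, words[i]))
    return examples


# Hypothetical sample sentence used only for illustration.
pairs = next_word_examples("LLMs predict the next word", context_size=2)
for context, target in pairs:
    print(context, "->", target)
```

Every position in the text yields one training example, which is part of why large text collections translate into such large compute requirements.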

2024-02-08 17:00:03

Original from www.computerworld.com