Microsoft has announced the next in its suite of smaller, more nimble artificial intelligence (AI) models aimed at specific use cases.

Earlier this year, Microsoft unveiled Phi-1, the first of what it calls small language models (SLMs); they have far fewer parameters than their large language model (LLM) predecessors. For example, the GPT-3 LLM, the basis for ChatGPT, has 175 billion parameters. GPT-4, OpenAI’s latest LLM, has about 1.7 trillion parameters. Phi-1 was followed by Phi-1.5, which, by comparison, has 1.3 billion parameters.

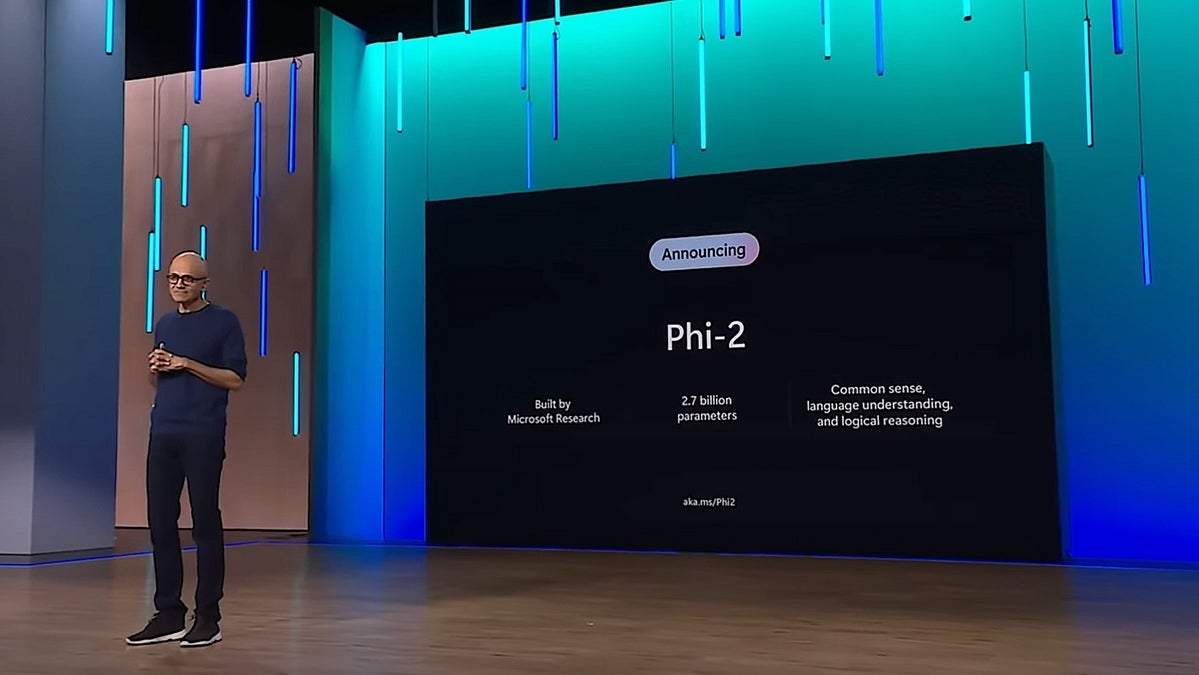

Phi-2 is a 2.7 billion-parameter language model that the company claims can outperform LLMs up to 25 times larger.
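To put those parameter counts in proportion, here is a minimal Python sketch that uses only the figures quoted in this article. A model 25 times the size of Phi-2 would have about 67.5 billion parameters. The memory estimate assumes weights stored as 16-bit floats (two bytes per parameter); that assumption is ours, not Microsoft's, and the helper names are hypothetical.

```python
# Parameter counts as quoted in the article.
# The GPT-4 figure is the article's approximate number, not an official one.
PARAMS = {
    "Phi-1.5": 1.3e9,
    "Phi-2": 2.7e9,
    "GPT-3": 175e9,
    "GPT-4": 1.7e12,
}

# Assumption for illustration: weights stored as 16-bit floats (2 bytes each).
BYTES_PER_PARAM_FP16 = 2


def size_ratio(larger: str, smaller: str) -> float:
    """Return how many times more parameters `larger` has than `smaller`."""
    return PARAMS[larger] / PARAMS[smaller]


def weight_memory_gb(model: str, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Rough size of the weights alone, in gigabytes (10**9 bytes)."""
    return PARAMS[model] * bytes_per_param / 1e9


if __name__ == "__main__":
    # Size of a model 25 times larger than Phi-2, in billions of parameters.
    print(f"25 x Phi-2: {25 * PARAMS['Phi-2'] / 1e9:.1f}B parameters")
    for name in ("GPT-3", "GPT-4"):
        print(f"{name} is about {size_ratio(name, 'Phi-2'):.0f}x the size of Phi-2")
    for name in PARAMS:
        print(f"{name}: roughly {weight_memory_gb(name):,.1f} GB of weights at 16-bit")
```

The estimate covers weights only; memory for activations, caches, and training state would come on top of it.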

Microsoft is a major shareholder in and partner of OpenAI, the developer of ChatGPT, which launched a little more than a year ago. Microsoft uses ChatGPT as the basis for its Copilot generative AI assistant.

LLMs used for generative AI (genAI) applications such as ChatGPT or Bard can consume vast amounts of processor cycles and, because of their size, can be costly and time-consuming to train for specific use cases. Smaller, more industry- or business-focused models can often provide better results tailored to business needs.

“Sooner or later, scaling of GPU chips will fail to keep up with increases in model size,” said Avivah Litan, a vice president and distinguished analyst with Gartner Research. “So, continuing to make models bigger and bigger is not a viable option.”

Currently, there’s a growing trend to shrink LLMs to make them more affordable and capable of being trained for domain-specific tasks, such as online chatbots for financial services clients or genAI applications that can summarize electronic healthcare records.

Smaller, more domain-specific language models trained on targeted data will eventually challenge the dominance of today’s leading LLMs, including OpenAI’s GPT-4, Meta AI’s LLaMA 2, and Google’s PaLM 2.

Dan Diasio, Ernst & Young’s Global Artificial Intelligence Consulting Leader, noted that there’s currently a backlog of GPU orders. A chip shortage not only creates problems for tech firms making LLMs, but also for user companies seeking to tweak models or build their own proprietary LLMs.

“As a result, the costs of fine-tuning and building a specialized corporate LLM are quite high, thus driving the trend towards knowledge enhancement packs and building libraries of prompts that contain specialized knowledge,” Diasio said.

Citing its compact size, Microsoft is pitching Phi-2 as an “ideal playground for researchers,” including for exploration around mechanistic interpretability, safety improvements, or fine-tuning experimentation on a variety of tasks. Phi-2 is available in the Azure AI Studio model catalog.

“If we want AI to be adopted by every business, not just the billion-pound multinationals, then it needs to be cost-effective,” according to Victor Botev, former…

2023-12-19 07:41:02

Post from www.computerworld.com