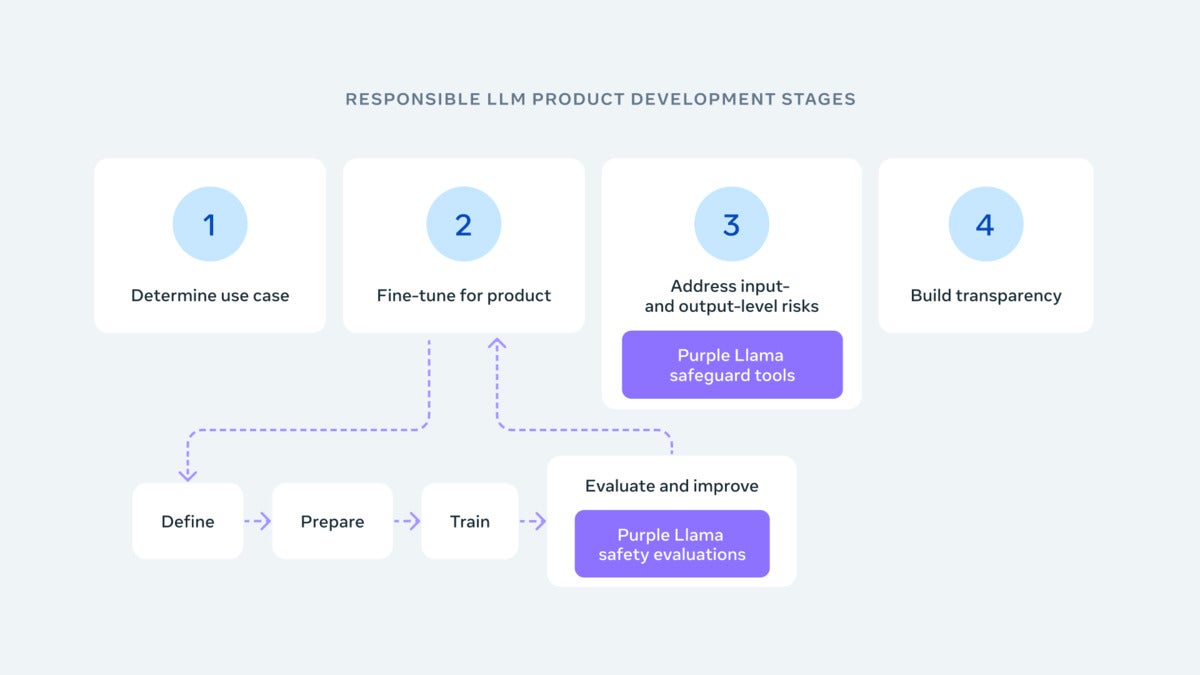

Meta has introduced Purple Llama, a project dedicated to creating open-source tools for developers to evaluate and boost the trustworthiness and safety of generative AI models before they are used publicly.

Meta stressed that AI safety requires collaboration, arguing that the challenges AI poses cannot be tackled in isolation. The company said Purple Llama aims to establish a shared foundation for developing safer generative AI as concerns mount about large language models and other AI technologies.

“The people building AI systems can’t address the challenges of AI in a vacuum, which is why we want to level the playing field and create a center of mass for open trust and safety,” Meta wrote in a blog post.

Gareth Lindahl-Wise, Chief Information Security Officer at the cybersecurity firm Ontinue, called Purple Llama “a positive and proactive” step towards safer AI.

“There will undoubtedly be some claims of virtue signaling or ulterior motives in gathering development onto a platform – but in reality, better ‘out of the box’ consumer-level protection is going to be beneficial,” he added. “Entities with stringent internal, customer, or regulatory obligations will, of course, still need to follow robust evaluations, undoubtedly over and above the offering from Meta, but anything that can help rein in the potential Wild West is good for the ecosystem.”

The project involves partnerships with AI developers; cloud services like AWS and Google Cloud; semiconductor companies such as Intel, AMD, and Nvidia; and software firms including Microsoft. The collaboration aims to produce tools for both research and commercial use to test AI models’ capabilities and identify safety risks.

The first set of tools released through Purple Llama includes CyberSecEval, a set of benchmarks for assessing the cybersecurity risks of large language models. Developers can use CyberSecEval to test whether their AI models are prone to generating insecure code or helping carry out cyberattacks. Meta’s research has found that large language models often suggest vulnerable code, underscoring the need for continuous testing and improvement in AI security.

Llama Guard is another tool in the suite: a large language model trained to identify potentially harmful or offensive language. Developers can use Llama Guard to check whether their models produce or accept unsafe content, helping them filter out prompts that might lead to inappropriate outputs.


2023-12-08 16:41:04

Link from www.infoworld.com